Spark Tutorial for Beginners

Spark Hands-On Exercise 2

Guru Wednesday, December 11, 2019 1523


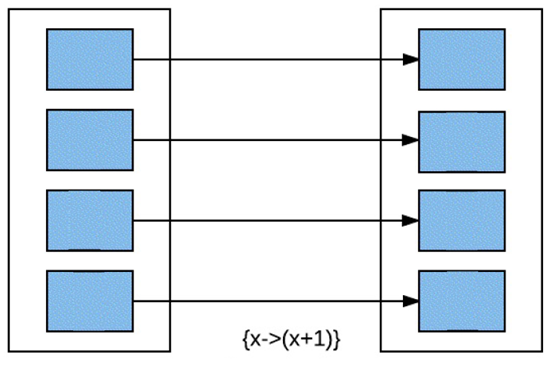

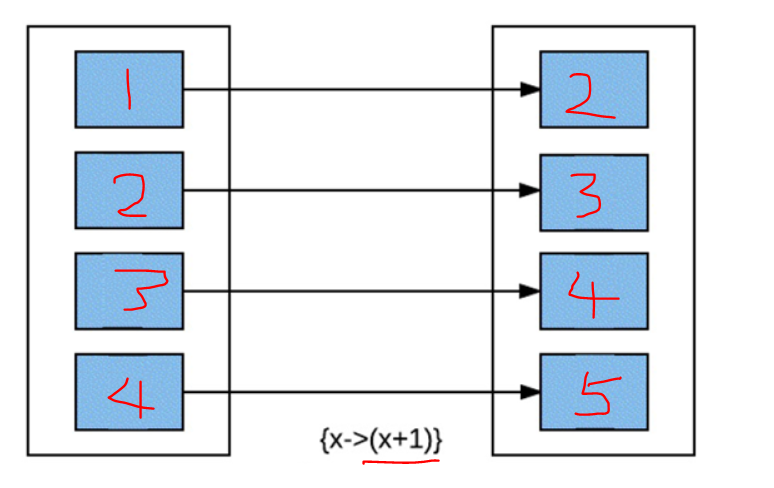

#MAP transformation

#Each element is passed through a function and becomes a new element.

    import org.apache.spark.SparkConf;
    import org.apache.spark.api.java.JavaRDD;
    import org.apache.spark.api.java.JavaSparkContext;

    import java.util.Arrays;
    import java.util.List;

    public class SparkMapReduceMain {

        public static void main(String[] args) throws Exception {
            System.out.println("Hello World");

            // Run Spark locally, with as many worker threads as there are cores
            SparkConf sparkConf = new SparkConf()
                    .setAppName("Example Spark App")
                    .setMaster("local[*]");

            // try-with-resources stops the context once the job is done
            try (JavaSparkContext sparkContext = new JavaSparkContext(sparkConf)) {
                List<Integer> intList = Arrays.asList(1, 2, 3, 4);

                // Distribute the list as an RDD with 2 partitions
                JavaRDD<Integer> intRDD = sparkContext.parallelize(intList, 2);

                // map is lazy: nothing is computed until an action such as collect()
                JavaRDD<Integer> afterMapValue = intRDD.map(x -> x + 1);

                // Collect each RDD to the driver for printing
                System.out.println("Before MAP");
                for (int value : intRDD.collect()) {
                    System.out.println(value);
                }

                System.out.println("After MAP");
                for (int value : afterMapValue.collect()) {
                    System.out.println(value);
                }
            }
        }
    }
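The element-wise behavior of `map` can also be checked without a Spark runtime. The sketch below is only a local analogy: it uses `java.util.stream` rather than the Spark API, and the class name `LocalMapSketch` and the helper `addOne` are made up for illustration. It applies the same `x -> x + 1` function to the same four integers.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

public class LocalMapSketch {

    // Hypothetical helper (not part of Spark): returns a new list in which
    // every element of the input has been incremented by 1.
    static List<Integer> addOne(List<Integer> input) {
        return input.stream()
                .map(x -> x + 1)               // one output element per input element
                .collect(Collectors.toList()); // materialize the result as a new list
    }

    public static void main(String[] args) {
        List<Integer> intList = Arrays.asList(1, 2, 3, 4);
        List<Integer> afterMap = addOne(intList);

        System.out.println("Before MAP: " + intList);  // prints "Before MAP: [1, 2, 3, 4]"
        System.out.println("After MAP: " + afterMap);  // prints "After MAP: [2, 3, 4, 5]"
    }
}
```

As with the RDD in the Spark program, the input is left unchanged and `map` yields a new collection of the same size. The analogy stops there: a stream runs inside a single JVM, whereas an RDD is split into partitions that Spark can process in parallel.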